June 12 marks two years of us using Amazon Simple Workflow Service (SWF) in production, and I thought I’d share the experience.

First, let’s get this out of the way:

What is SWF not?

- SWF does not execute any code.

- SWF does not contain the logic of the workflow.

- SWF does not allow you to draw a workflow or a state machine.

So what is it?

SWF is a web service that keeps the state of your workflow.

That’s pretty much it.

What are we using it for?

Our project is based on C#. We are using the AWS API directly (using the .Net SDK).

If you are using Java or Ruby, Amazon provides a higher-level library for SWF called the Flow Framework. For C#, I wrote what I needed myself, or simply used the “low level” API.

Our project processes a large number of files daily, and it was my task to convert our previous batch-based solution to SWF.

How does it work?

SWF is based on polling. Your code runs on your machines on AWS or on-premises – it doesn’t matter. Your code is polling for tasks from the SWF API (where they wait in queues), receives a task, executes it, and sends the result back to the SWF API.

SWF then issues new tasks to your code, and keeps the history of the workflow (state).
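A minimal sketch of that poll, execute, report cycle, written without the AWS SDK so it can run anywhere. `ITaskApi`, `ActivityTask`, `PollingLoop`, and `InMemoryTaskApi` are hypothetical names made up for illustration; a real implementation would call the SWF API where the fake reads from a queue. The one SWF behaviour it assumes is that a long poll can come back empty, in which case the loop simply polls again.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-ins for the SWF API; these names do not come from the AWS SDK.
public record ActivityTask(string TaskToken, string Input);

public interface ITaskApi
{
    // Long poll: returns null when no task arrived before the poll timed out.
    Task<ActivityTask?> PollAsync(string taskList, CancellationToken ct);
    Task CompleteAsync(string taskToken, string result);
    Task FailAsync(string taskToken, string reason);
}

public static class PollingLoop
{
    // One iteration: poll, execute, report. Returns false when the poll came back empty.
    public static async Task<bool> ProcessOneAsync(
        ITaskApi api, string taskList, Func<string, Task<string>> execute, CancellationToken ct)
    {
        ActivityTask? task = await api.PollAsync(taskList, ct);
        if (task is null) return false;

        try
        {
            string result = await execute(task.Input);
            await api.CompleteAsync(task.TaskToken, result);
        }
        catch (Exception ex)
        {
            // Report the failure so the workflow history records it.
            await api.FailAsync(task.TaskToken, ex.Message);
        }
        return true;
    }

    // Keeps polling until the caller cancels.
    public static async Task RunAsync(
        ITaskApi api, string taskList, Func<string, Task<string>> execute, CancellationToken ct)
    {
        while (!ct.IsCancellationRequested)
            await ProcessOneAsync(api, taskList, execute, ct);
    }
}

// In-memory fake, only for trying the loop without AWS.
public class InMemoryTaskApi : ITaskApi
{
    public Queue<ActivityTask> Pending { get; } = new();
    public List<string> Log { get; } = new();

    public Task<ActivityTask?> PollAsync(string taskList, CancellationToken ct) =>
        Task.FromResult<ActivityTask?>(Pending.Count > 0 ? Pending.Dequeue() : null);

    public Task CompleteAsync(string taskToken, string result)
    {
        Log.Add($"completed {taskToken}: {result}");
        return Task.CompletedTask;
    }

    public Task FailAsync(string taskToken, string reason)
    {
        Log.Add($"failed {taskToken}: {reason}");
        return Task.CompletedTask;
    }
}
```

Splitting a single iteration out of the loop is a design choice made here for testability: `ProcessOneAsync` can be driven by the in-memory fake, while `RunAsync` is what a long-running process would call.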

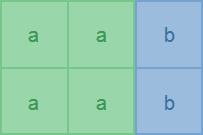

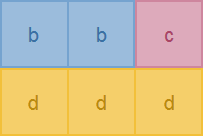

If you’ve read any of the documentation, you probably know there are two kinds of tasks: Activity Tasks (processed by workers), and Decision Tasks (processed by the Decider). This API naturally encourages and leads you to a nice design of your software, where different components do different things.

Workers

Workers handle Activity Tasks.

Workers are simple components that actually do the work of the workflow. These are the building blocks of the workflow, and typically do one simple thing:

- Take an S3 path as input and calculate the hash of the file.

- Add a row to the database.

- Send an email.

- Take an S3 path to an image and create a thumbnail.

- …

All of my workers implement a simple interface:

    public interface IWorker<TInput>
    {
        Task Process(TInput input);
    }

An important property of workers is that all the data a worker needs to perform its task is included in its input.

The Decider

When I first read about SWF I had a concept of tiny workers and deciders working together like ants to achieve a greater goal. Would that it were so simple.

While workers are simple, each type of workflow has a decider with this operation:

- Poll for a decision task.

- Receive a decision task with all new events since the previous decision task.

- Optionally load the entire workflow history to get context.

- Make multiple decisions based on all new events.

For a simple linear workflow this isn’t a problem. The decider code is typically:

    if workflow started
        Schedule activity task A
    else if activity task A finished
        Schedule activity task B
    else if activity task B finished
        Schedule activity task C
    else if activity task C finished
        Complete workflow execution.
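The linear case above can be sketched in C# as a pure function from the latest event to the next decision. The `EventKind`, `HistoryEvent`, and `Decision` types are hypothetical simplifications; real SWF history events carry many more fields and refer to activities by event ID rather than by name.

```csharp
using System;

// Hypothetical, simplified shapes; not the types used by the AWS SDK.
public enum EventKind { WorkflowStarted, ActivityCompleted }

public record HistoryEvent(EventKind Kind, string ActivityName = "");

public record Decision(string Action, string Target = "");

public static class LinearDecider
{
    // Activities in the order they should run.
    private static readonly string[] Steps = { "A", "B", "C" };

    public static Decision Decide(HistoryEvent latest)
    {
        if (latest.Kind == EventKind.WorkflowStarted)
            return new Decision("ScheduleActivity", Steps[0]);

        int index = Array.IndexOf(Steps, latest.ActivityName);
        if (index < 0)
            throw new ArgumentException($"Unknown activity '{latest.ActivityName}'");

        // Schedule the next step, or complete the workflow after the last one.
        return index + 1 < Steps.Length
            ? new Decision("ScheduleActivity", Steps[index + 1])
            : new Decision("CompleteWorkflow");
    }
}
```

Keeping the step order in one array means adding a step D is a one-line change instead of another `else if` branch.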

However, when the logic of the workflow is complicated a decider may be required to handle this event:

Since the previous decision, Activity Task A completed successfully with this result, Activity Task B failed, we wouldn’t start the child workflow you’ve requested because you were rate limited, oh, and that timer you’ve set up yesterday finally went off.

This is a pretty complicated scenario. The Decider has just one chance of reacting to these events, and they all come at the same time. There are certainly many approaches here, but either way the decider is a hairy piece of code.

Code Design

As I’ve mentioned earlier, my workers are simple, and don’t use the SWF API directly – I have another class to wrap an IWorker. This is a big benefit because any programmer can write a worker (without knowing anything of SWF), and because it is easy to reuse the code in any context. When the worker fails I expect it to simply throw an exception – my wrapper class registers the exception as an activity task failure.
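A rough sketch of what such a wrapper could look like, not the actual class from our project. It assumes the worker interface is generic in `TInput` and that the task input arrives as JSON; `WorkerRunner`, `ActivityOutcome`, `EmailRequest`, and `SendEmailWorker` are hypothetical names. Where this sketch returns an outcome object, a real wrapper would report completion or failure to SWF. It relies on `System.Text.Json` support for records, so .NET 5 or later is assumed.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;

public interface IWorker<TInput>
{
    Task Process(TInput input);
}

// Hypothetical outcome type; a real wrapper would report this to SWF instead.
public record ActivityOutcome(bool Succeeded, string Reason = "");

public class WorkerRunner<TInput> where TInput : class
{
    private readonly IWorker<TInput> _worker;

    public WorkerRunner(IWorker<TInput> worker) => _worker = worker;

    // Takes the raw task input (assumed to be JSON) and never lets an exception escape.
    public async Task<ActivityOutcome> RunAsync(string rawInput)
    {
        try
        {
            TInput input = JsonSerializer.Deserialize<TInput>(rawInput)
                ?? throw new ArgumentException("Task input deserialized to null");
            await _worker.Process(input);
            return new ActivityOutcome(true);
        }
        catch (Exception ex)
        {
            // Any exception from the worker becomes an activity failure.
            return new ActivityOutcome(false, $"{ex.GetType().Name}: {ex.Message}");
        }
    }
}

// Example worker that knows nothing about SWF.
public record EmailRequest(string To, string Subject);

public class SendEmailWorker : IWorker<EmailRequest>
{
    public Task Process(EmailRequest input)
    {
        if (string.IsNullOrWhiteSpace(input.To))
            throw new ArgumentException("Recipient is required");
        Console.WriteLine($"Sending '{input.Subject}' to {input.To}");
        return Task.CompletedTask;
    }
}
```

The worker only throws; deciding what a failure means for the workflow stays outside of it.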

To make writing complicated deciders easier I’ve implemented helper functions to get the history of the workflow, parse it, and make new decisions. My decider is split into a base class that uses the SWF API, and child classes (one for each workflow type) that accept the workflow history and return new decisions. My deciders do not connect to a database or any external resource, and have no side-effects (except logs and the SWF API, of course). This allows me to easily unit-test the decider logic – I can record a workflow history at a certain point to JSON, feed it to the decider, and see what decisions it makes. I can also tweak the history to make more test cases easily. These tests are important to me because the decider can contain a lot of logic.
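To illustrate the idea of replaying a recorded history, here is a small sketch under simplifying assumptions. `RecordedEvent`, `DeciderLogic`, and `CustomerRequestDecider` are hypothetical names, the JSON shape is invented for the example, and decisions are plain strings. The event type names (`ActivityTaskCompleted`, `ActivityTaskFailed`, `TimerFired`) do exist in SWF, but real events carry far more detail. The base class here only parses JSON; in my description above it is also the part that talks to SWF.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical, simplified shape of a recorded history event.
public record RecordedEvent(string Type, string Name);

public abstract class DeciderLogic
{
    // Pure function: events in, decisions out, no I/O.
    public abstract IReadOnlyList<string> Decide(IReadOnlyList<RecordedEvent> newEvents);

    // Replays a history that was previously saved as a JSON array.
    public IReadOnlyList<string> ReplayFromJson(string json)
    {
        var events = JsonSerializer.Deserialize<List<RecordedEvent>>(json)
            ?? new List<RecordedEvent>();
        return Decide(events);
    }
}

public class CustomerRequestDecider : DeciderLogic
{
    // A single decision task may carry several new events; react to each of them.
    public override IReadOnlyList<string> Decide(IReadOnlyList<RecordedEvent> newEvents)
    {
        var decisions = new List<string>();
        foreach (var e in newEvents)
        {
            switch (e.Type)
            {
                case "ActivityTaskCompleted" when e.Name == "A":
                    decisions.Add("Schedule B");
                    break;
                case "ActivityTaskFailed":
                    decisions.Add($"Retry {e.Name}");
                    break;
                case "TimerFired":
                    decisions.Add($"Handle timer {e.Name}");
                    break;
            }
        }
        return decisions;
    }
}
```

A test then boils down to calling `ReplayFromJson` with a saved history and comparing the returned list to the expected decisions; editing the JSON by hand gives additional test cases.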

Scalability

In either case, for deciders and for workers, I keep zero state in the class instance. All state comes from the workflow history in the decider’s case, and task input in the worker’s case. There is no communication between threads and no shared memory. This approach makes writing scalable programs trivial: there are no locks and no race conditions. I can have as many processes running on as many machines as I’d like, and it just works – there is no stage of discovery or balancing. As a proof of concept, I even ran some of the threads on Linux (using Mono), and it all worked seamlessly.

Retries

The Flow Framework has built-in retries, but it only took me a few hours to implement retries for failed activity tasks, and a few more hours to add exponential backoff. This works nicely – the worker doesn’t know anything. The decider schedules another activity task or fails the workflow. The retry will wait a few minutes, and may run on another server. This does prove itself, and many errors are easily resolved.
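The backoff arithmetic itself is small. The following is a sketch with made-up names (`RetryPolicy`, `DelayFor`, `AfterFailure`) and a made-up cap, not the code from our project: the delay doubles with every attempt until it reaches a maximum, and the decider gives up after a fixed number of failures.

```csharp
using System;

public static class RetryPolicy
{
    // Delay before retry number `attempt` (1-based):
    // baseDelay * 2^(attempt - 1), capped at maxDelay.
    public static TimeSpan DelayFor(int attempt, TimeSpan baseDelay, TimeSpan maxDelay)
    {
        if (attempt < 1) throw new ArgumentOutOfRangeException(nameof(attempt));

        // Limit the exponent so very large attempt numbers stay finite.
        int exponent = Math.Min(attempt - 1, 30);
        double seconds = baseDelay.TotalSeconds * Math.Pow(2, exponent);
        return TimeSpan.FromSeconds(Math.Min(seconds, maxDelay.TotalSeconds));
    }

    // Decider-side choice after a failure: schedule again or fail the workflow.
    public static string AfterFailure(int failedAttempts, int maxAttempts) =>
        failedAttempts < maxAttempts ? "ScheduleRetry" : "FailWorkflow";
}
```

With a base delay of one minute and a cap of thirty minutes (both values are assumptions for the example), the first three retries wait 1, 2, and 4 minutes, and the sixth would wait 32 minutes but is capped at 30.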

Timeouts

SWF has many types of timeouts, and I decided early on that I would use them everywhere. Even on manual steps we have timeouts of a few days.

Timeouts are important. They are the only way the workflow can detect worker or decider tasks that are stuck because a process crashed. They also encourage you to think about your business process a little better – what does it mean when it takes four days to handle a request? Can we do something automatically?

Another good (?) property of timeouts is that timeouts can purge your queues when the volume gets too high for your solution.

Integration with other AWS services

Lambda

SWF can execute an AWS Lambda function instead of an activity task, which is a compelling idea. It saves the trouble of writing the worker and polling for tasks, and reduces the overhead of a large number of threads and open connections. In the simple worker examples I gave above, all of them can be written as Lambda functions (except maybe adding a database row, depending on your database and architecture). The combination of Lambda serverless execution and SWF statefulness can make a robust and trivially scalable system.

But – while you can use Lambda to replace your workers, you still need to implement a decider that uses the API and runs as a process on your servers. This is a shame. Decision tasks are quick and self-contained, and deciders can easily be implemented as Lambda functions – if they didn’t have to poll for tasks.

I predict Amazon are going to add this feature: allowing AWS Lambda to work as a decider is a logical next step, and can make SWF a lot more appealing.

CloudWatch

CloudWatch shows accumulated metadata about your workflows and activity tasks. For example, this chart shows the server CPU (blue) and executions of an Activity Task (orange):

This is nice for seeing execution time and how the system handles large volumes. The downside is that while it shows accumulated data, there is no drill-down. I can clearly see 25 “look for cats in images” workflows failed, but there is no way of actually seeing them. More on that below.

What can be better

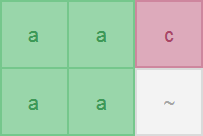

Rate Limiting and Throttling

More specifically, limiting the number of operations per second. I don’t get rate limiting. Mostly, rate limiting feels like this:

I understand rate limiting can be useful, and it’s a good option when faulty code is running amok. However, even when I just started it felt like the SWF rate limiting was too trigger-happy. As a quick example – if I have a workflow that sets a timer, and I start that workflow several hundred times, some workflows will fail to set the timer because of a rate limit. I then have to ask for a timer again and again until I succeed. I can’t even wait before asking for a timer again because, well, waiting means setting a timer… (To add insult to injury, the request to set the timer is removed from the history, so I can’t really know exactly which timer failed.)

For this reason, when I implemented exponential backoff between failures I didn’t use timers at all – I used a dummy activity task with a short schedule-to-start timeout. Activity tasks are not rate-limited per time (looking at the list again – this statement doesn’t look accurate, but that list wasn’t public at the time).

I just don’t get the point. The result isn’t better for Amazon or for the programmers. I understand the motive behind rate limiting, but it should be better tuned.

SWF Monitoring API

The API used for searching workflows is very limiting. A few examples:

- Find all workflows of type Customer Request – Supported.

- Find all failed workflows – Supported.

- Find all failed workflows of type Customer Request – Not supported.

- Find all workflows that used the “Send SMS” activity task – Nope.

- Find the 6 workflows where the “Send SMS” activity task timed out – No way, not even close.

This can get frustrating. CloudWatch can happily report 406 workflows used the “Send SMS” activity task between 13:00 and 13:05, and 4 activity tasks failed. There is no way of finding these workflows.

So sure, it isn’t difficult to implement it myself (we do have logs), but a feature like this is missing.

The AWS Console

The AWS management console is poor in general. The UI is dated and riddled with small bugs and oversights: JavaScript-based links do not allow middle-clicking, there are bugs when the workflow history is too big, and links are missing where they are obvious: the RunId of a parent or child workflow should be clickable, the number of a decision task should link to that decision, the queue name should link to the count of pending tasks, etc.

And of course, the console is using the API, so everything the API cannot do, the console can’t either.

Working with the console leaves a lot to be desired.

Community

There is virtually no noteworthy discussion on SWF. I’m not sure that’s important.

Conclusion

While SWF has its quirks, I am confident and happy with our solution.

2018 Update

An important comment is that SWF doesn’t seem to be in active development. From the FAQs – When should I use Amazon SWF vs. AWS Step Functions?:

AWS customers should consider using Step Functions for new applications. If Step Functions does not fit your needs, then you should consider Amazon Simple Workflow (SWF).

…

AWS will continue to provide the Amazon SWF service, Flow framework, and support all Amazon SWF customers

So it still works, and our code still works, but SWF is not getting any new features. This is certainly something to consider when choosing a major component in your system.

What is better in 2018 is visibility into rate limits: there are CloudWatch metrics that show you your limit, usage, and throttled events, and there is a structured support form for increasing the rate limits.

Thanks!
